[Update: Peerage of Science (and I) have started to use the hashtag #ProRev]

You know I am worried about the current state of the review process, mainly because it is one of the pillars of science. There are tons of things we can do in the long run to enhance it, but I came up with a little thing we can do right now to complement the current process. The basic idea is to give reviewers the opportunity to be proactive and state their interest in reviewing a paper on a given topic when they have the time. How? Via Twitter. Editors (like I will be doing from @iBartomeus) can ask for reviewers using a hashtag (#ProactiveReviewers). For example:

“Anyone interested in reviewing a paper on this cool subject for whatever awesome journal? #ProactiveReviewers”

If you are interested and have the time, just reply to the tweet, or send me an email/DM if you are concerned about privacy.

The rules: it is not binding. 1) I can choose not to send it to you, for example if there is a conflict of interest. 2) You can choose not to accept it once you read the full abstract.

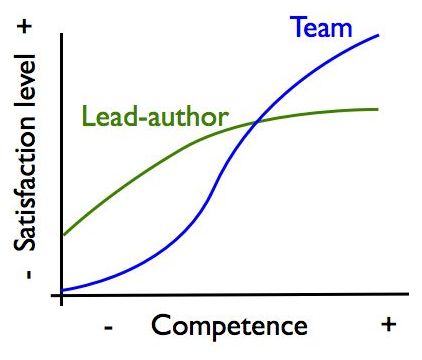

Why the hell should I, as a reviewer, want to volunteer? I already got 100 invitations that I had to decline!

Well, fair enough, here is the main reason:

Because you believe being proactive helps speed up the process and you are interested in making publishing as fast as possible. Matching reviewers' interests and availability will be faster this way than sending invitations one by one to people the editor thinks may be interested (for availability there is not even a guess).

Some extra reasons:

– Timing: Because you received 10 invitations to review last month, when you had this grant deadline and couldn’t accept any, and now that you have “time” you want to review but invitations don’t come.

– Interests: Because you only receive invitations to review stuff related to your past work, but you want to actually review things about your current interests.

– Get in the loop: Because you are finishing your PhD and want to gain experience reviewing, but you don’t get the invitations yet.

– Because you want the “token” that some journals give in appreciation (e.g. Ecology Letters gives you a free subscription for reviewing for them).

– Because you want to submit your work to a given journal and want to see how the review process works firsthand.

So, is this going to work? I don’t know, but if a few editors start using it, the hashtag #ProactiveReviewers can become one more tool. Small changes can be powerful.

It was written in the 1920s and is surprisingly modern. He makes a strong argument to let the data talk for your science and he makes some very relevant points against the inclusion of honorary authors. I also love his steps to write a paper:
